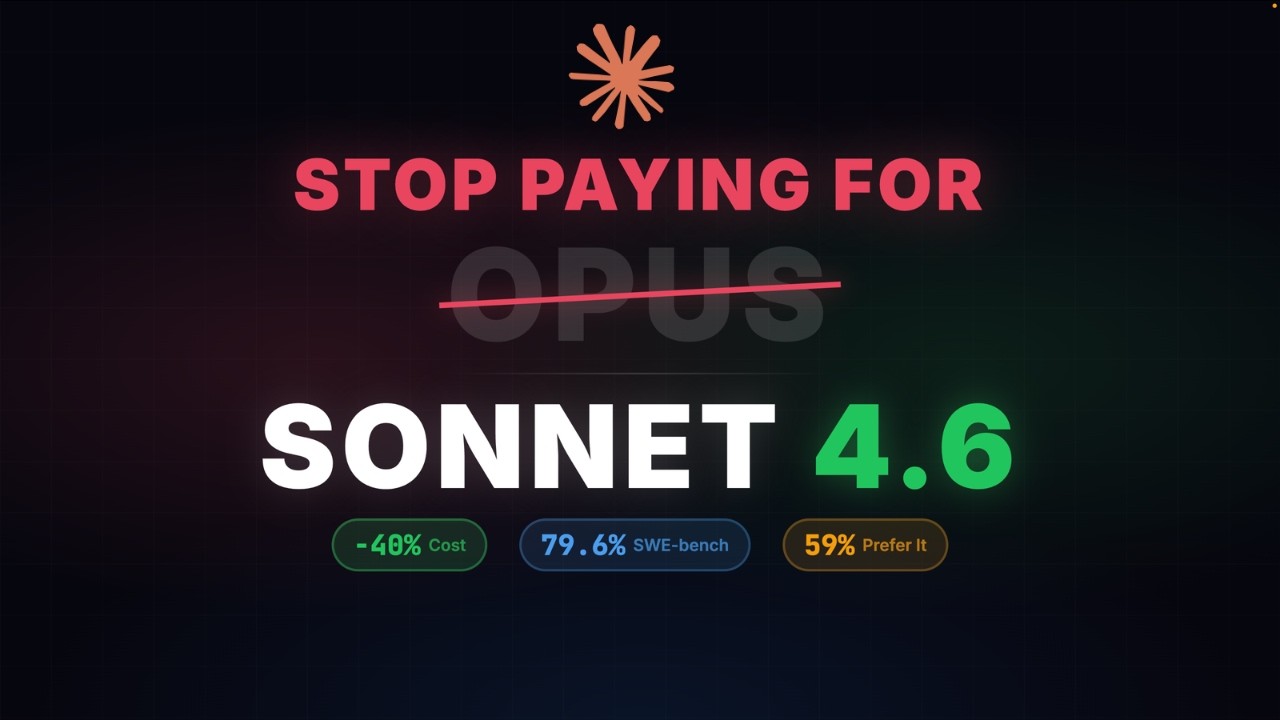

Stop Paying for Opus: Claude Sonnet 4.6 Changes Everything for Developers

On February 17th, Anthropic quietly released a model that should make every developer reconsider their API budget. Claude Sonnet 4.6 scores 79.6% on SWE-bench Verified. Claude Opus 4.6 — the model you're paying a premium for — scores 80.8%. That's a 1.2-point gap. For 40% less money.

Eric Simons, the CEO of Bolt, put it bluntly: Sonnet 4.6 is becoming their go-to model for the kind of deep codebase work that used to require more expensive models. And in Anthropic's own internal testing with Claude Code, 59% of developers actually preferred Sonnet 4.6 over the previous flagship, Opus 4.5.

In this article, I'll show you exactly what changed, the benchmarks that prove it, and the four code changes you need to make to save 40% on your API bill starting today.

The Three Problems Every Developer Was Dealing With

Before we get into what's new, let me describe the three problems that every developer using the Anthropic API has been living with.

Problem 1: Cost Bloat

If you were building anything serious — refactoring code, doing complex Q&A, running agents — the safe choice was always Opus. $5 per million input tokens, $25 per million output tokens. And most developers just defaulted to it for everything. Even simple bug fixes. Even quick questions. Because switching models mid-workflow was a headache.

Problem 2: Binary Thinking

Extended thinking in Sonnet 4.5 was an on-or-off switch. You either turned it on for every single API call (expensive) or turned it off and risked missing edge cases. There was no way for the model to decide: "Hey, this is a simple question, I don't need to burn tokens on deep reasoning." You had to guess. And you had to guess for every single call.

Problem 3: The Context Cliff

You're deep in a coding session. You've been going back and forth with Claude for an hour. Then you hit the 200,000 token wall. Your options? Either brutally truncate the conversation and lose critical context, or start a completely fresh session and lose everything. Both options break your flow. Both waste your time.

To summarize: we were overpaying, over-thinking, and constantly losing our place.

What Sonnet 4.6 Actually Changes

Sonnet 4.6 fixes all three of these problems. And it does it at the same price point as the old Sonnet: $3 input, $15 output per million tokens.

Fix 1: Near-Identical Quality for 40% Less

Look at the numbers:

| Benchmark | Sonnet 4.6 | Opus 4.6 | Gap |

|---|---|---|---|

| SWE-bench Verified | 79.6% | 80.8% | 1.2 pts |

| SWE-bench (prompt-modified) | 80.2% | 80.8% | 0.6 pts |

| OfficeQA | Matches | Baseline | Identical |

| OSWorld | 72.5% | 72.7% | 0.2 pts |

That SWE-bench gap is statistical noise. And with a prompt modification, Anthropic got Sonnet to 80.2%, making the gap just 0.6 points. On OfficeQA — which measures real-world office tasks — they're identical. Box reported that Sonnet 4.6 outperforms the previous Sonnet by 15 percentage points on heavy reasoning tasks.

Fix 2: Adaptive Thinking (type: "auto")

This is the feature I'm most excited about. Instead of a binary on-or-off toggle, you now pass type: "auto" in your thinking config. The model decides for itself how deeply to reason on each task.

Simple null pointer bug? Quick answer, low token cost. Complex race condition across three files? Deep reasoning kicks in automatically.

You stop guessing, and the model optimizes your costs for you.

Before (Sonnet 4.5):

response = client.messages.create(

model="claude-sonnet-4-5-20250929",

max_tokens=8000,

thinking={

"type": "enabled",

"budget_tokens": 10000 # Guessing every time

},

messages=messages

)After (Sonnet 4.6):

response = client.messages.create(

model="claude-sonnet-4-6",

max_tokens=8000,

thinking={

"type": "auto" # Model decides depth automatically

},

messages=messages

)Three lines down to one. The model handles the rest.

Fix 3: Context Compaction (Previously Opus-Only)

The API automatically summarizes older conversation context when you approach the token limit. Your session runs effectively forever. No manual truncation. No lost context.

response = client.beta.messages.create(

betas=["compact-2026-01-12"],

model="claude-sonnet-4-6",

max_tokens=4096,

messages=messages,

context_management={

"edits": [{

"type": "compact_20260112",

"trigger": {

"type": "input_tokens",

"value": 150000 # Auto-summarizes when tokens exceed 150K

}

}]

}

)When your conversation crosses 150K tokens, the API automatically summarizes the older parts. Your session just keeps going.

Bonus: Computer Use

Sonnet 4.6 scores 72.5% on OSWorld — near human-level for tasks like navigating spreadsheets and filling out multi-step web forms. The stat that blew my mind: zero hallucinated links. The previous Sonnet hallucinated about 1 in every 3 links during computer use. Zero now. Plus a major improvement in prompt injection resistance. If you're building any kind of browser automation, this is a massive upgrade.

The Evidence: Hard Numbers and Real Customer Feedback

Developer Preference

In Anthropic's own Claude Code testing:

- 70% of developers preferred Sonnet 4.6 over Sonnet 4.5

- 59% preferred it over Opus 4.5 (the previous flagship)

Developers said Sonnet 4.6 was "significantly less prone to overengineering," with fewer false claims of success, fewer hallucinations, and more consistent follow-through on multi-step tasks.

Agentic Coding: A Step Change

Sonnet 4.6 is +8.1 percentage points better at agentic terminal coding than Sonnet 4.5 (measured on Terminal-Bench 2.0 with thinking turned off). That is not an incremental improvement. That is a step change.

Real Customer Results

| Company | Result |

|---|---|

| Box | 15 percentage points over Sonnet 4.5 on heavy reasoning Q&A |

| Cognition | "Meaningfully closed the gap with Opus on bug detection" |

| Databricks | Matches Opus 4.6 on OfficeQA |

| Pace | 94% on insurance benchmark — highest for computer use |

The Cost Math at Scale

| Model | Input Cost | Output Cost | Per 1M Calls |

|---|---|---|---|

| Opus 4.6 | $5.00 | $12.50 | $17.50 |

| Sonnet 4.6 | $3.00 | $7.50 | $10.50 |

| You Save | $2.00 | $5.00 | $7.00 (40%) |

Based on ~1K input + 500 output tokens per call.

Scale that to 100 million calls a month — which is real production volume for many companies — and you're saving $700,000 per month. That's $8.4 million per year for the same output quality.

The Honest Caveat: When Opus Still Wins

I want to be transparent. Opus 4.6 is still the better model for some things:

- Deep architectural reasoning where getting it exactly right matters

- Agent Teams lead roles — Opus plans better at that level

- Complex cybersecurity analysis

- 128K max output — Sonnet maxes out at 64K

My Decision Framework

| Task | Recommended Model |

|---|---|

| Quick task (bug fix, small feature, Q&A) | Sonnet 4.6 ($3/$15) |

| Deep architectural reasoning | Opus 4.6 ($5/$25) |

| Multi-file refactor / agentic coding | Sonnet 4.6 (+8.1 pts better) |

| Agent Teams — lead role | Opus 4.6 (deep planning) |

| Agent Teams — team members | Sonnet 4.6 (execution) |

| High-volume production / batch | Sonnet + batch ($1.50/$7.50) |

| Computer use / browser automation | Sonnet 4.6 (72.5% OSWorld) |

| Speed for exploration | Haiku 4.5 ($0.80/$4.00) |

Bookmark this framework.

The Four Code Changes You Need to Make

Change 1: The One-Line Swap

# Before (Opus — $5/$25 per MTok)

response = client.messages.create(

model="claude-opus-4-5-20251101",

...

)

# After (Sonnet 4.6 — $3/$15 per MTok)

response = client.messages.create(

model="claude-sonnet-4-6",

...

)Same code. Same prompt. Same max tokens. The only thing that changes is your bill — it drops by 40%. This is the easiest optimization you will make all year.

Change 2: Adaptive Thinking

Replace "type": "enabled" + a blind budget_tokens with "type": "auto". The model now allocates its own thinking budget per task. Simple bug? Maybe 500 tokens of reasoning. Complex race condition? It might use 15,000. You're not guessing anymore.

Change 3: Context Compaction

Add the compact beta flag and set your trigger threshold. When your conversation crosses that threshold, the API automatically summarizes the older parts. Your session just keeps going. This used to be Opus-only. Now it works on Sonnet 4.6.

Change 4: Claude Code Model Switching

If you're using Claude Code in your terminal, Sonnet 4.6 is now the default model. But if you hit a hard architecture problem:

/model claude-opus-4-6 # Switch to Opus temporarily

# Solve the hard problem...

/model claude-sonnet-4-6 # Switch back and save money

/fast # Quick exploration tasksThink of it like driving: Sonnet is your daily commuter. Opus is the track car you pull out for special occasions.

The Bottom Line

Take whatever tasks you've been running on Opus and switch them to Sonnet 4.6 for one week. Just one week. I bet you won't switch back.

The benchmarks are nearly identical. The developer preference numbers speak for themselves. And the cost savings are real — from 40% on standard calls to 50%+ with batch processing.

Sonnet 4.6 isn't just "good enough." For most development tasks, it's actually better than what came before. Less overengineering. Fewer hallucinations. More consistent follow-through. And it costs less.

That's not a trade-off. That's a free upgrade.

Model IDs for Your Code

- Sonnet 4.6:

claude-sonnet-4-6 - Opus 4.6:

claude-opus-4-6 - Haiku 4.5:

claude-haiku-4-5-20251001

Sources

Related Articles

- Claude Code Tutorial: Complete Beginner's Guide (2026)

- Use Claude Code with Any AI Model — GPT, Gemini, DeepSeek

- Chrome DevTools MCP vs Claude in Chrome vs Playwright

Watch the full video breakdown on AyyazTech YouTube channel for live code demos of all four changes.

]]>